About a year and a half ago I started showing off UHFind, my web-app that made it easier to search for classes at the University of Hawaii.

I started it around 2009 as a junior in college

and used it to help myself (and hopefully others) graduate on time. I continued

improving the project for fun well after graduation by making it faster, adding

data from the UH community college system, and allowing for more advanced queries.

It’s time for me to move on to other personal projects and I want to write this post as a way to recap some of the interesting technical challenges I faced.

This first post is how I retrieved the UH course data without official database access. I might write about other aspects of the project as time goes on, but let’s play it by ear.

The Problem

Like most colleges, the University of Hawaii requires its students to complete courses that satisfy certain requirements in order to graduate, such as those designated as writing-intensive, pertaining to ethics, or with an oral-communications focus.

The university course listings were given as a listing of tables. There was no way to search for classes that met graduation requirements, so I thought to build something to solve this.

It would be relatively simple to implement a filter for the classes, but how would I get the data in the first place? Without database access, I resorted to writing a spider that would visit each page in the UH catalog, parse out the HTML, and return the data in a format I could work with.

Scraping is hard

HTML for one course looked something like this:

<tr>

<td class="default">DY</td>

<td class="default"><a href="./avail.class?i=MAN&t=201530&c=80395">80395</a></td>

<td nowrap="" class="default">CHEM 161L</td>

<td class="default">006</td>

<!-- ssrmeet_array_length = 0 -->

<!-- ssrmeet_array_length = 1 -->

<td class="default">General Chemistry Lab I</td>

<td class="default">1</td>

<td class="default"><abbr title="Stephen Margosiak"><span class="abbr" title="Stephen Margosiak">S Margosiak</span></abbr></td>

<td class="default" align="center">3</td>

<td class="default">M</td>

<td nowrap="" class="default">0130-<spacer>0420p</spacer></td>

<td class="default"><abbr title="Bilger Hall Addition Room 105"><span class="abbr" title="Bilger Hall Addition Room 105">BILA 105</span></abbr></td>

<td class="default">01/12-05/15</td>

</tr>

The worst part about this was that sometimes classes would add extra rows or columns so I wouldn’t always be able to count on a particular data field always being located at the same line index.

Attempt 1: UHFind the Java applet

But I pressed on. Back then the only programming language I knew was Java, so I wrote the tool as a Java applet (lol). I look at this code and laugh at the 179 massively ugly lines of code that I wrote to parse it.

Here’s a hilarious little gem:

// temp_ascii_storage contains all the lines of the HTML file

for (int i = 0; i < temp_ascii_storage.size(); i++) {

String currentLine = temp_ascii_storage.get(i);

String prevLine = i == 0 ? temp_ascii_storage.get(0) : temp_ascii_storage.get(i - 1);

if (CRN.matcher(currentLine).matches()) {

courseNum = currentLine;

if (prevLine.contains("FGA") || prevLine.contains("DA") // <...snip...>

) {

focus = prevLine;

} else {

focus = "none";

}

}

// <...about 160 more lines of programming atrocities...>

}

Yup. I am going line by line and trying to determine what data field each line is. This is hardcoding at its finest. Of course this is going to be an absolute mess to maintain, but hey, this parser worked once and that was good enough for me.

The worst thing about parsing the catalog this way was that it was very unpredictable and I ended up hardcoding a lot of edgecases. It wasn’t until I decided to try something different around 2013 when I had more success.

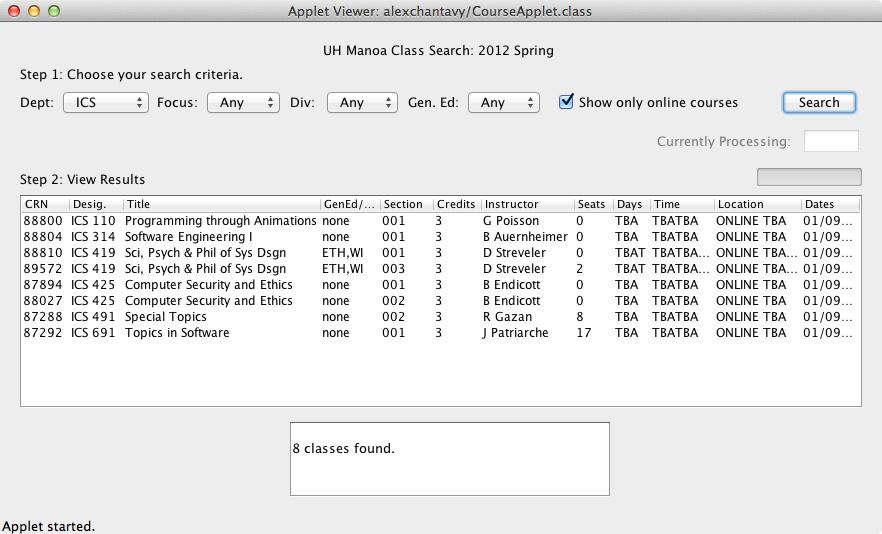

The applet itself wasn’t that bad… except for the fact that it was a Java applet. Here’s a screenshot from 2012 when I was trying to pick the project back up again.

Attempt 2: Enter phantom

Even though I had already been out of school two years by 2013, it always bugged me that I didn’t do all that I wanted to do with UHFind. I was interested in web dev and needed a project to get myself going. The first step was to figure out a smarter way to scrape the school website.

phantom.js is a headless browser powered by the WebKit engine and it’s promoted as an automated test tool for web frontends. It also can “Access and manipulate webpages with the standard DOM API”.

This meant that instead of adding hardcoded checks for each line of HTML, I

could instead use phantom to manipulate the DOM elements of the page directly

and access the text! phantom essentially gave me a CLI to run JavaScript code that could

run in a “real” browser’s web console, including really helpful functions like document.querySelectorAll().

My new web scraper was a lot easier to manage and maintain. Here’s a snippet:

// get the course table back as a DOM object

var rows = document.querySelectorAll('table.listOfClasses tr');

var catalog = [];

var row_len = rows.length;

// Iterate through the <tr>s starting at index 2 to skip the header rows.

for (var i = 2; i < row_len; i++) {

var course = {};

course.campus = campus;

course.genEdFocus = [];

// <...snipping out some edge cases here for clarity...>

// check for ` ` using char code 0xA0

course.genEdFocus = (rows[i].cells[0].textContent == '\xA0') ?

[] :

rows[i].cells[0].textContent.split(',');

course.crn = rows[i].cells[1].textContent;

course.course = rows[i].cells[2].textContent;

course.sectionNum = rows[i].cells[3].textContent;

course.title = rows[i].cells[4].textContent;

course.credits = rows[i].cells[5].textContent;

course.instructor = (rows[i].cells[6].textContent == 'TBA') ?

'TBA' :

// get only the prof's last name

rows[i].cells[6].textContent.substring(2);

// <...>

catalog.push( course );

}

As you see here, I’m able to directly access the DOM object and save it to a JavaScript object. That ugly HTML page becomes a manageable JSON array, ready to be placed into the database of my choosing - a topic I might explore in a future post.

[{

"campus": "MAN",

"genEdFocus": [

"DY"

],

"crn": "80395",

"course": "CHEM 161L",

"sectionNum": "006",

"title": "General Chemistry Lab I",

"credits": "1",

"instructor": "Margosiak",

"waitListed": null,

"waitAvail": null,

"mtgTime": [

{

"days": [

"M"

],

"start": "0130",

"end": "0420p",

"loc": "BILA 105",

"dates": "01/12-05/15"

}

]

}, {"etc": "..."}]

Gorgeous.

And that’s how I scraped my university’s course catalog. phantom is a really

great tool. This seems like a good stopping point for this post. At some point I’ll try to also write about about using node to power the site’s backend or

getting around some of phantom‘s bugs.